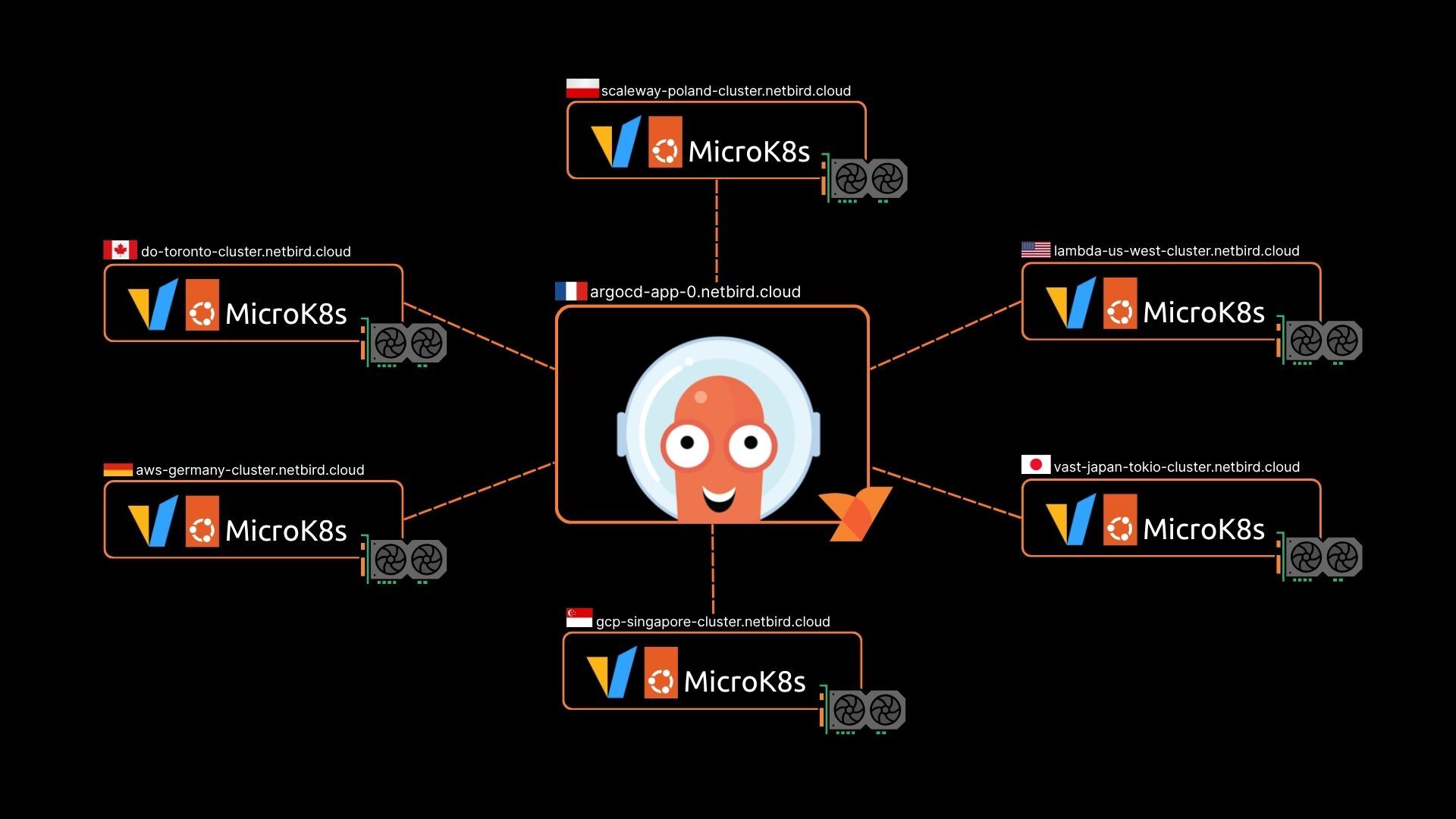

We've built what we call the "Mega Mesh": a distributed AI inference demo infrastructure that connects GPU resources across multiple cloud providers using Argo CD, MicroK8s, vLLM, and NetBird. Rather than being locked into a single provider's GPU availability and pricing, this architecture creates a flexible, geographically distributed platform for serving large language models, with simple and secure remote access for easy management.

Backstory

A while back, we had a very normal idea:

Demonstrate that multi-cloud networking isn’t complicated. That you don’t need to learn the quirks of every provider’s VPC tools, mess with firewall configs, or open random ports and hope for the best. After all, that’s what we build at NetBird - a secure, peer-to-peer networking platform that connects machines across clouds and regions as if they were on the same private LAN.

Meanwhile, the rest of the world was getting swept up in AI “excitement.” We didn't. We’re a private networking company, the kind that makes "boring" (but necessary) tasks like remote access and machine connectivity even more boring (by which we mean: simpler).

So we asked ourselves: how can we show the world that multi-cloud networking is easy - and tie it to the latest technology trends? The answer, as it turned out, began with a simple question: what if we connected a few different cloud GPU providers into one big private network?

The Idea That Got Out of Hand

It started as a fun weekend project.

We figured: let’s show that secure, zero-trust connectivity across providers isn’t only possible - it’s easy. Even fun.

And then, as these things go, someone casually said, “What if we connected thirty cloud GPU providers?” We laughed - and then thought, why not? After all, that’s exactly what our technology was built to do - connecting machines at scale.

We called it the Multi-Cloud AI Mega Mesh, because when you’re connecting dozens of clouds into a single, unified overlay network, you might as well sound dramatic.

From NetBird Relays to AI Inference

When we started brainstorming what kind of use case would actually show this off, we realized we didn’t need to invent anything new just for it.

At NetBird, our relay architecture is distributed across multiple data centers and cloud providers. Relays step in when direct peer-to-peer connections aren’t possible, ensuring machines can still connect. The architecture already does something clever: it routes traffic intelligently based on geography and load. If a user in Europe connects, they’re routed to a nearby relay. U.S. traffic stays in the U.S. It’s all about minimizing latency without anyone needing to think about it.

So we thought - why not apply the same idea to AI inference for our demo?

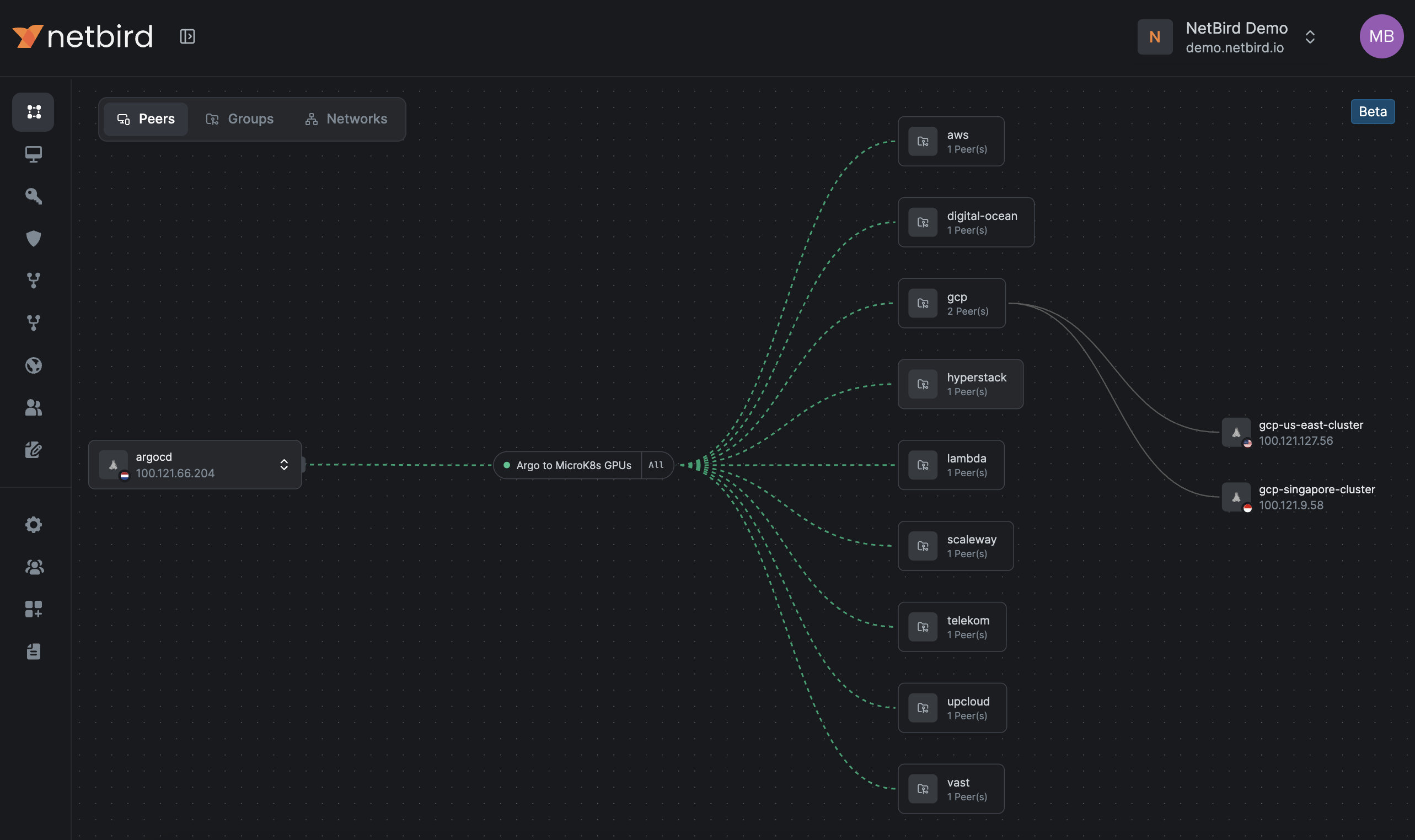

Each region runs vLLM on a MicroK8s cluster deployed with Argo CD, connected through NetBird’s peer-to-peer WireGuard network. When someone in Europe sends an inference request, it goes to a GPU node in Europe. U.S. requests land on U.S. GPUs.

It’s the same routing logic we use in our production network, just applied to serving AI workloads instead of relays.
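
As a rough illustration of that "nearest region first, then least loaded" idea, here is a minimal Python sketch. It is not NetBird's actual routing code: the `Endpoint` type, the `pick_endpoint` function, the region labels, and the overlay addresses are all hypothetical placeholders. The only assumption borrowed from real tooling is that each vLLM instance exposes its OpenAI-compatible API under `/v1` on port 8000.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    """One vLLM server reachable over the private overlay (hypothetical model)."""
    name: str
    region: str            # e.g. "eu", "us" (placeholder labels)
    url: str               # placeholder overlay address, not a real host
    active_requests: int   # stand-in for whatever load signal you track
    healthy: bool = True


def pick_endpoint(client_region: str, endpoints: list[Endpoint]) -> Endpoint:
    """Prefer a healthy endpoint in the client's region, then the least loaded."""
    healthy = [e for e in endpoints if e.healthy]
    if not healthy:
        raise RuntimeError("no healthy inference endpoints")
    # Keep traffic in-region when possible; otherwise fall back to any region.
    local = [e for e in healthy if e.region == client_region]
    candidates = local or healthy
    return min(candidates, key=lambda e: e.active_requests)


# Illustrative inventory; every value here is made up for the example.
ENDPOINTS = [
    Endpoint("eu-1", "eu", "http://100.64.0.11:8000/v1", active_requests=3),
    Endpoint("eu-2", "eu", "http://100.64.0.12:8000/v1", active_requests=1),
    Endpoint("us-1", "us", "http://100.64.0.21:8000/v1", active_requests=0),
    Endpoint("ap-1", "ap", "http://100.64.0.31:8000/v1", active_requests=0,
             healthy=False),
]

if __name__ == "__main__":
    for region in ("eu", "us", "ap"):
        chosen = pick_endpoint(region, ENDPOINTS)
        print(f"{region} -> {chosen.name} ({chosen.url})")
```

Because every endpoint is addressed by its overlay IP, the selection logic never needs to know which cloud provider a GPU node actually lives in. A request from a region with no healthy node simply falls back to the least loaded node elsewhere.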

In other words, we didn’t have to make up a new demo. We just reused the architecture we already knew worked and replaced “traffic” with “AI inference.”

The Users We Didn't Expect

When we started this project, we thought we were building a demo. A proof of concept that would showcase NetBird's capabilities at scale.

What we found instead was a user segment we'd underestimated: AI companies that don't use cloud AI services.

These aren't the companies using OpenAI's API or Anthropic's Claude. They're training their own models, running their own inference, and managing their own infrastructure. Some are in the cloud, some are on-prem, many are both. They're dealing with the same GPU scarcity everyone else faces, but they need more control than managed services provide.

Multi-cloud networking is not a new concept. Enterprises have connected multiple clouds and on-prem environments for years. But AI workloads have different characteristics than traditional enterprise applications. They're compute-intensive, latency-sensitive, and increasingly distributed across whatever infrastructure you can actually provision.

The Part That Should Have Been Hard

Connecting infrastructure across multiple cloud providers should be a nightmare. Every networking engineer knows this. You'd normally need VPN configurations for each provider, firewall and security group rules that inevitably conflict, and someone on permanent standby to troubleshoot when region X can't talk to region Y.

We spent more time creating accounts than connecting machines.

I'm not exaggerating for effect. The actual time breakdown looked like this: navigating 30 different signup flows, waiting for credit card verification, requesting GPU quota increases (and getting denied), filling out "tell us about your use case" forms, and waiting for sales calls we didn't want. All of that took days. Connecting the machines that we did provision? Minutes per provider.

That's the insight that matters here. Multi-cloud networking has historically been complex because we've tried to solve it at the wrong layer. When you operate at the WireGuard level with a proper architecture, the underlying provider becomes nearly irrelevant.

So we did what any reasonable engineers would do: we worked with what we had. We connected nine providers, got our mesh running, and decided to demonstrate the concept anyway.

Because the surprising part, the part that actually matters, is how trivial the networking turned out to be.

And of course, once our cards are verified and sales reps have blessed our “use case,” we’ll finally achieve the dream: connecting all 30 providers in perfect harmony.

Build Your Own AI Mega Mesh

In the spirit of open source, we’ve shared the entire Mega Mesh demo setup and published a detailed guide so anyone can build on it, adapt it to their needs, and join the community pushing it forward. And for the visual learners out there, Brandon, our technology evangelist, is putting together a video walkthrough.

If you’re ready to get your hands dirty right away, create a free NetBird account and see just how easy private networking can be.